4.1. System architecture

The project addresses a series of complex interdisciplinary research problems (psychology, electronics, computer science) aimed at developing a new concept for the therapy of speech impairments. The final product will be composed of:

§ an intelligent system, installed on the desktop PC in the logopaed's office, that will include a virtual visual and audio model of the human speech mechanism (an absolute novelty at the European level)

§ an embedded system acting as the child's "therapeutic friend"

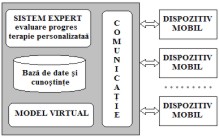

The two systems will be able to interconnect and share information. The architecture of the integrated therapy system is presented in Figure 1. The intelligent system on the logopaed's PC will include:

a module for monitoring each child's progress and evolution, including audio samples, and an expert system that will perform inference on the audio and chronological data provided by the monitoring module.

Figure 1. The architecture of the integrated therapy system

During the therapy sessions directed by the logopaed, the instructions given to the child for improving his pronunciation require detailed explanations of the components involved in producing speech.

Current therapy uses rigid, static models that fall far short of the dynamic possibilities of a virtual visual simulator, which can also generate audio data as a function of the different positions of the elements involved in pronunciation. The mobile device for personalized therapy targets two main objectives:

1. assisting the child with his therapy homework

2. downloading the child's audio samples for further analysis by the logopaed or other trained personnel

When the device is connected to the desktop PC, all data samples will be stored in a database that will later serve to enhance the therapy system.
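The transfer of recordings to the PC can be illustrated with a minimal C sketch. The record layout below (a fixed 18-byte header carrying a child identifier, exercise identifier, timestamp, sample rate and payload length, followed by the audio bytes) is a hypothetical assumption, not a format defined by the project. Writing every field explicitly in little-endian order lets the device and the PC agree on the layout regardless of processor endianness.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical header for one recorded exercise sent from the device to the
 * logopaed's PC; the audio bytes follow it. Field choice is an assumption. */
typedef struct {
    uint32_t child_id;    /* key of the child's record in the PC database */
    uint16_t exercise_id; /* exercise that produced the recording */
    uint32_t timestamp;   /* seconds since the epoch, for the chronological history */
    uint32_t sample_rate; /* in Hz */
    uint32_t payload_len; /* number of audio bytes following the header */
} sample_header;

#define HEADER_SIZE 18u /* 4 + 2 + 4 + 4 + 4 bytes on the wire */

/* Store the low nbytes of v in little-endian order. */
static void put_le(uint8_t *p, uint32_t v, int nbytes) {
    for (int i = 0; i < nbytes; i++) p[i] = (uint8_t)(v >> (8 * i));
}

/* Read nbytes little-endian bytes back into an integer. */
static uint32_t get_le(const uint8_t *p, int nbytes) {
    uint32_t v = 0;
    for (int i = 0; i < nbytes; i++) v |= (uint32_t)p[i] << (8 * i);
    return v;
}

/* Serialize h into buf; returns HEADER_SIZE, or 0 if buf is too small. */
size_t encode_header(const sample_header *h, uint8_t *buf, size_t cap) {
    if (cap < HEADER_SIZE) return 0;
    put_le(buf + 0, h->child_id, 4);
    put_le(buf + 4, h->exercise_id, 2);
    put_le(buf + 6, h->timestamp, 4);
    put_le(buf + 10, h->sample_rate, 4);
    put_le(buf + 14, h->payload_len, 4);
    return HEADER_SIZE;
}

/* Parse a header received by the PC; returns 0 on success, -1 if truncated. */
int decode_header(const uint8_t *buf, size_t len, sample_header *h) {
    if (len < HEADER_SIZE) return -1;
    h->child_id = get_le(buf + 0, 4);
    h->exercise_id = (uint16_t)get_le(buf + 4, 2);
    h->timestamp = get_le(buf + 6, 4);
    h->sample_rate = get_le(buf + 10, 4);
    h->payload_len = get_le(buf + 14, 4);
    return 0;
}
```

On the PC side, `decode_header` would be applied to the first 18 bytes received, after which `payload_len` audio bytes are read and stored in the database.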

The functions of the mobile device are as follows:

§ presenting the speech exercises to the child

§ personalized interaction with the user (based on age, sex and accent differences) during therapy

§ evaluating and encouraging the child's progress

§ capturing audio samples of the child's exercises and communicating with the logopaed's PC

We propose the development of a dedicated SoC device that will include a series of extensions for processing audio signals by means of specific firmware functions.

4.2. Intelligent system for personalized therapy

Information systems with real-time feedback that address pathological speech impairments are relatively recent, mainly because of the amount of processing power they require. Current progress in computer science now allows such a system to be developed with low risk.

Recordings of the children's pronunciation will also be used to enrich the existing audio database and to improve the performance of the current diagnosis system.

The therapeutic guide is built under the direct guidance of the logopaed, using the module installed in his office. The specific advantages of an expert system for the therapy of children with speech impairments are as follows:

§ patience, flexibility and unlimited working time, whenever the child wishes

§ close presence: many children who find it difficult to communicate with adults may feel comfortable with a toy-like device

§ more precise explanations

§ objective evaluation of progress is hard for a human expert to achieve, whereas the system is designed to analyze the evolution of each case objectively over short time intervals

The automatic personalized therapy system stores the precise evolution and progress of each child and, by adapting the exercises to each child's current level and progress, may shorten the time the speech therapy needs to achieve its result.
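The adaptation of exercises to a child's current level can be sketched as a simple rule over the child's recent evaluation scores. This is a hypothetical illustration, not the project's expert system: the 0 to 100 score scale, the five-attempt window, the ten difficulty levels and the 80/50 thresholds are placeholder assumptions that a logopaed would tune.

```c
#include <stddef.h>

#define MAX_LEVEL 10 /* hypothetical number of difficulty levels */
#define WINDOW 5     /* number of recent attempts considered */

/* Hypothetical per-child progress record kept by the monitoring module. */
typedef struct {
    int level;          /* current exercise difficulty, 1..MAX_LEVEL */
    int scores[WINDOW]; /* most recent evaluation scores, 0..100 */
    size_t count;       /* valid entries in scores (at most WINDOW) */
    size_t next;        /* ring-buffer position for the next score */
} child_progress;

/* Record a new score, overwriting the oldest once the window is full. */
void record_score(child_progress *p, int score) {
    p->scores[p->next] = score;
    p->next = (p->next + 1) % WINDOW;
    if (p->count < WINDOW) p->count++;
}

/* Suggest the next difficulty level: up when the recent mean is high,
 * down when it is low, unchanged otherwise or while data is insufficient. */
int next_level(const child_progress *p) {
    if (p->count < WINDOW) return p->level;
    int sum = 0;
    for (size_t i = 0; i < WINDOW; i++) sum += p->scores[i];
    int mean = sum / WINDOW;
    if (mean >= 80 && p->level < MAX_LEVEL) return p->level + 1;
    if (mean < 50 && p->level > 1) return p->level - 1;
    return p->level;
}
```

The function only suggests a level and does not modify the record; how such suggestions are applied would remain a decision encoded in the logopaed's therapeutic guide.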

The experimental audio data will be collected and analyzed in the first phase of the research by specialists from the Interscholar Regional Logopaedic Center of Suceava. We are also drawing on existing research, which provides an excellent starting point for estimating the models behind an appropriate visual representation for the patient.

4.3. Mobile embedded system architecture

The main requirements for a dedicated SoC architecture involve two multimedia features, audio (recording, compression and playback) and graphics (a friendly interface), as well as other processing facilities for evaluation procedures, defining short-term therapy and PC communication. For these requirements we propose the following structure:

§ Xilinx MicroBlaze 32-bit microprocessor

§ Ethernet interface for communication with the PC

§ UART and JTAG interfaces, both used for debugging

§ SDRAM/FLASH interface used for data and program storage

§ VGA/LCD graphical interface

§ Audio in/out interface

§ HMM (Hidden Markov Model) interface

Figure 2. Mobile embedded system architecture
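The proposal does not specify what the HMM interface computes. Assuming it scores a child's recording against phoneme models, the standard evaluation step for a discrete HMM is the forward algorithm, sketched below in software. The two-state, two-symbol sizes, the use of vector-quantized audio frames as symbols, and the idea of comparing a correct-pronunciation model with an error model are all illustrative assumptions; a hardware HMM block would presumably accelerate the inner loop.

```c
#include <stddef.h>

#define N_STATES 2
#define N_SYMBOLS 2

/* Discrete HMM: initial probabilities pi, transitions a[i][j] = P(j | i),
 * emissions b[j][k] = P(symbol k | state j). */
typedef struct {
    double pi[N_STATES];
    double a[N_STATES][N_STATES];
    double b[N_STATES][N_SYMBOLS];
} hmm;

/* Forward algorithm: returns P(obs | model) for T observation symbols.
 * Unscaled for clarity; long recordings would need per-frame scaling or
 * log-domain arithmetic to avoid underflow. */
double hmm_forward(const hmm *m, const int *obs, size_t T) {
    double alpha[N_STATES], next[N_STATES], p = 0.0;
    if (T == 0) return 1.0;
    for (int j = 0; j < N_STATES; j++) alpha[j] = m->pi[j] * m->b[j][obs[0]];
    for (size_t t = 1; t < T; t++) {
        for (int j = 0; j < N_STATES; j++) {
            double s = 0.0;
            for (int i = 0; i < N_STATES; i++) s += alpha[i] * m->a[i][j];
            next[j] = s * m->b[j][obs[t]];
        }
        for (int j = 0; j < N_STATES; j++) alpha[j] = next[j];
    }
    for (int j = 0; j < N_STATES; j++) p += alpha[j];
    return p;
}

/* Index of the model (e.g. correct vs. typically mispronounced phoneme)
 * that best explains the observed symbol sequence. */
size_t best_model(const hmm *models, size_t n, const int *obs, size_t T) {
    size_t best = 0;
    double best_p = -1.0;
    for (size_t k = 0; k < n; k++) {
        double p = hmm_forward(&models[k], obs, T);
        if (p > best_p) { best_p = p; best = k; }
    }
    return best;
}
```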

4.4. A Phono Articulator Virtual Reality Model

In therapy it is very important to present, on a physical 3D model of the mouth cavity, all the hidden movements that influence pronunciation. In keeping with the complexity of the articulatory system, we can identify at least five views for illustrating the differences between the child's pronunciation and the correct one: frontal, side and palatal views, a binary indicator ("semaphore") that shows the direction of air in the mouth cavity, and a binary indicator that shows the direction of air in the nasal cavity.

Feedback on the articulation produced can be given through a mini-profile showing the 2D or 3D outline of the tongue in different positions, at different scales and from different points of view. The main objectives in building this virtual model are the following:

§ A 3D model of the elements involved in pronunciation, allowing real-time video demonstration of correct / wrong positioning.

§ Phoneme generation based on the current positions of the virtual model's elements.

§ Natural interaction between the logopaed or patient and the virtual model through:

- specification of flexion points of the mouth cavity elements

- audio-video comparison of the current positioning
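The tongue mini-profile and its flexion points can be illustrated with a parametric curve. As a hypothetical sketch (the proposal does not fix a curve type), the 2D tongue outline in side view is modelled as a cubic Bézier curve whose four control points stand for the tip, two flexion points and the root; linearly blending two control polygons animates the transition from the child's tongue position to the correct one.

```c
/* 2D point in the side-view (sagittal) plane; units are arbitrary. */
typedef struct { double x, y; } point2;

/* Cubic Bezier: B(t) = (1-t)^3 c0 + 3(1-t)^2 t c1 + 3(1-t) t^2 c2 + t^3 c3. */
point2 bezier3(const point2 c[4], double t) {
    double u = 1.0 - t;
    double w0 = u * u * u, w1 = 3.0 * u * u * t, w2 = 3.0 * u * t * t, w3 = t * t * t;
    point2 p = {w0 * c[0].x + w1 * c[1].x + w2 * c[2].x + w3 * c[3].x,
                w0 * c[0].y + w1 * c[1].y + w2 * c[2].y + w3 * c[3].y};
    return p;
}

/* Sample n >= 2 points along the outline, from c[0] (t = 0) to c[3] (t = 1). */
void sample_contour(const point2 c[4], point2 *out, int n) {
    for (int i = 0; i < n; i++) out[i] = bezier3(c, (double)i / (n - 1));
}

/* Linear blend of two control polygons: s = 0 gives `from`, s = 1 gives `to`.
 * Stepping s per frame animates a hypothetical wrong-to-correct transition. */
void blend_pose(const point2 from[4], const point2 to[4], double s, point2 out[4]) {
    for (int i = 0; i < 4; i++) {
        out[i].x = from[i].x + s * (to[i].x - from[i].x);
        out[i].y = from[i].y + s * (to[i].y - from[i].y);
    }
}
```

Moving one control point corresponds to dragging a flexion point; the curve remains smooth, which suits the deformable-model requirement at low computational cost.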

A virtual reality system that offers a physical 3D model of the mouth cavity, as well as a version that uses transparencies so that tongue flexions and the positions of the teeth and palatal cavity can be visualized, is of great importance in the speech therapy field [6,7]. Using a parametric physical model of the elements that generate speech allows the speech therapist to demonstrate the correct position of these elements for the correct pronunciation of different phonetic sequences.

Special aspects must be considered when implementing the virtual environment:

§ each model must fulfil real-time animation constraints

§ the models must be deformable to simulate the interaction between different articulatory elements and the effects they have on emitted sounds (again, under real-time animation constraints)

§ natural interaction with the virtual environment

The virtual model must meet a number of quality requirements so that it gives the user the feeling of a successful experiment.

4.5. Novelty and complexity of the project

The project introduces an auxiliary procedure in logopaedic therapy that is specific to the Romanian language.

The results are shown at the end of each exercise set, so that the child can see his own progress and errors during the therapy activity.

The phono-articulator simulator presents a high level of novelty and complexity that places it at the forefront of European research. Building a mobile embedded system for children's pronunciation therapy is likewise novel in both its hardware and software implementation (national and international bibliographic references show that such a device does not exist).

The fact that the system aims to treat pronunciation disorders in the Romanian language adds a further element of novelty. The high degree of complexity of the project results from the large number of different research areas involved: artificial intelligence (learning expert systems, data mining techniques, pattern recognition), virtual reality, digital signal processing, digital electronics (VLSI), computer architecture (System on Chip, embedded devices) and psychology (evaluation procedures, therapeutic guide, experimental design for validation).

4.6. Methodology and techniques

The development of a virtual 3D model that considers the elements producing speech, the interaction between them and the effects that different flexions have on the emitted sound is a complex task and, given the number of existing modelling approaches, a series of questions must be answered:

Which is the best model for each articulatory element (tongue, teeth, lips) that contributes to speech generation?

What is the appropriate complexity level for each model to ensure the demonstrative effect from the speech therapy point of view?

How do the different models interact, and how can the effects of this interaction be modelled?

Designing and building the intelligent system for personalized therapy will require research in the area of self-learning expert systems, which start from an initial knowledge base about learning and grow continuously, using data mining and pattern recognition techniques to extract knowledge from each child's evolution and to indicate an optimal therapeutic path. For the mobile embedded system, we turn to an area of computer science that has grown in importance over the last decade: System on Chip (SoC) development. The success of this type of circuit is due to its multiple functionalities, low cost, small number of components, integration of other electronic components into a single one, and high protection against reverse engineering attacks.

The psychotherapeutic research will follow three stages:

§ An experimental stage applying a pre-test/post-test research methodology (through initial and final evaluation) for the comparative analysis of classical and assisted therapy.

§ A diagnostic stage, aimed at learning the attitude of teachers and parents toward the use of a mobile embedded device in dyslalia therapy.

§ An integrative stage, identifying principles and methods for developing assisted therapy based on the previous two stages.

The signal processing techniques and methods are:

§ voice recording techniques, pattern sampling techniques, and audio compression techniques using ADPCM (Adaptive Differential Pulse Code Modulation) and MP3 algorithms

§ techniques for communication between the hardware device and the PC, using Ethernet (TCP/IP) and UART (serial port) interfaces
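The proposal names ADPCM without fixing a variant. As an assumption, the sketch below uses the widely used IMA/DVI ADPCM scheme, which encodes each 16-bit sample as a 4-bit code (a 4:1 ratio, ignoring block headers). The encoder applies exactly the same state update as the decoder, so both stay in lock-step without transmitting the predictor.

```c
#include <stdint.h>

/* IMA ADPCM step sizes, indexed by the adaptive step index (0..88). */
static const int16_t step_table[89] = {
    7, 8, 9, 10, 11, 12, 13, 14, 16, 17,
    19, 21, 23, 25, 28, 31, 34, 37, 41, 45,
    50, 55, 60, 66, 73, 80, 88, 97, 107, 118,
    130, 143, 157, 173, 190, 209, 230, 253, 279, 307,
    337, 371, 408, 449, 494, 544, 598, 658, 724, 796,
    876, 963, 1060, 1166, 1282, 1411, 1552, 1707, 1878, 2066,
    2272, 2499, 2749, 3024, 3327, 3660, 4026, 4428, 4871, 5358,
    5894, 6484, 7132, 7845, 8630, 9493, 10442, 11487, 12635, 13899,
    15289, 16818, 18500, 20350, 22385, 24623, 27086, 29794, 32767
};

/* Step-index adjustment for each 4-bit code (sign bit has no effect). */
static const int8_t index_table[16] = {
    -1, -1, -1, -1, 2, 4, 6, 8,
    -1, -1, -1, -1, 2, 4, 6, 8
};

/* Codec state; encoder and decoder each keep their own copy. */
typedef struct {
    int predictor; /* last reconstructed sample */
    int index;     /* current position in step_table */
} adpcm_state;

static int clamp(int v, int lo, int hi) { return v < lo ? lo : (v > hi ? hi : v); }

/* Apply a 4-bit code to the state; shared by encoder and decoder. */
static void adpcm_update(adpcm_state *s, uint8_t code) {
    int step = step_table[s->index];
    int delta = step >> 3;
    if (code & 4) delta += step;
    if (code & 2) delta += step >> 1;
    if (code & 1) delta += step >> 2;
    s->predictor = clamp((code & 8) ? s->predictor - delta : s->predictor + delta,
                         -32768, 32767);
    s->index = clamp(s->index + index_table[code & 15], 0, 88);
}

/* Encode one 16-bit sample into a 4-bit code (bit 3 = sign). */
uint8_t adpcm_encode(adpcm_state *s, int16_t sample) {
    int diff = sample - s->predictor;
    int step = step_table[s->index];
    uint8_t code = 0;
    if (diff < 0) { code = 8; diff = -diff; }
    /* Successive approximation of |diff| against step, step/2, step/4. */
    if (diff >= step) { code |= 4; diff -= step; }
    if (diff >= (step >> 1)) { code |= 2; diff -= step >> 1; }
    if (diff >= (step >> 2)) { code |= 1; }
    adpcm_update(s, code); /* reconstruct exactly as the decoder will */
    return code;
}

/* Decode one 4-bit code back into a 16-bit sample. */
int16_t adpcm_decode(adpcm_state *s, uint8_t code) {
    adpcm_update(s, code);
    return (int16_t)s->predictor;
}
```

On the device, two consecutive codes would typically be packed into one byte before storage or transfer to the PC.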

The therapeutic guide stored in the knowledge base consists of:

§ methods for developing the musculature of the phono-articulatory system

§ methods for controlling the rhythm of respiration

§ methods for developing phonemic hearing (onomatopoeic pronunciation, rhythmic pronunciation exercises, pronunciation of different phonemes)

The experimental design for system validation. Subjects: 90 children with pronunciation disorders, distributed as follows:

| Therapy / disorder | Sigmatism | Rhotacism | Polymorph dyslalia |
|---|---|---|---|
| Classical therapy | 15 children | 15 children | 15 children |
| Assisted therapy | 15 children | 15 children | 15 children |

The research will start with a pre-test stage consisting of an initial diagnosis of all children in the sample (using specific diagnostic techniques). The same instruments will be applied in the post-test stage.
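The pre-test/post-test comparison over the 2 × 3 design can be summarized by the mean gain (post-test minus pre-test score) in each cell. The sketch below is a hypothetical illustration: it assumes each diagnostic instrument yields a single numeric score per child, which the proposal does not specify.

```c
#include <stddef.h>

enum therapy { CLASSICAL, ASSISTED, N_THERAPY };
enum disorder { SIGMATISM, RHOTACISM, POLYMORPH_DYSLALIA, N_DISORDER };

/* One child's scores on the same diagnostic instrument before and after
 * therapy. The numeric score scale is a hypothetical assumption. */
typedef struct {
    enum therapy therapy;
    enum disorder disorder;
    double pre;  /* pre-test (initial diagnosis) score */
    double post; /* post-test (final evaluation) score */
} subject;

/* Mean gain (post - pre) for each therapy x disorder cell of the design.
 * Empty cells are reported as 0. */
void mean_gain(const subject *s, size_t n, double out[N_THERAPY][N_DISORDER]) {
    size_t count[N_THERAPY][N_DISORDER] = {{0}};
    for (int t = 0; t < N_THERAPY; t++)
        for (int d = 0; d < N_DISORDER; d++) out[t][d] = 0.0;
    for (size_t i = 0; i < n; i++) {
        out[s[i].therapy][s[i].disorder] += s[i].post - s[i].pre;
        count[s[i].therapy][s[i].disorder]++;
    }
    for (int t = 0; t < N_THERAPY; t++)
        for (int d = 0; d < N_DISORDER; d++)
            if (count[t][d] > 0) out[t][d] /= (double)count[t][d];
}
```

Comparing `out[ASSISTED][d]` with `out[CLASSICAL][d]` for each disorder gives only a descriptive contrast between the two therapy types; an appropriate statistical test would still be needed before drawing conclusions.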

4.7. Required equipment

Computers, audio recording instruments, and a hardware platform for developing the mobile embedded device (a development kit with a Xilinx FPGA, Spartan-3 or Virtex).

Software

The software instruments for the SoC are: Xilinx ISE, Xilinx Embedded Development Kit, the uClinux operating system, the gcc C compiler for the MicroBlaze microprocessor, and Xilinx System Generator for DSP.

Real-time animation of the elements that contribute to speech, and of the interactions between them, will be handled with specialized libraries commonly used in the graphics community:

§ OpenGL: an open, cross-platform standard API for developing 2D/3D interactive graphical applications. It offers several major advantages: industry support that guarantees an OpenGL standard; platform and operating system independence; portability; and continuous evolution and development (driven by the OpenGL Architecture Review Board, an independent consortium)

§ NVIDIA Cg (C for Graphics): a specialized shading language developed by NVIDIA that makes optimal use of graphics card performance, leading to an enhanced graphical experience

The contribution of each partner

A3.1 form: Realization plan / expected results